NEWS

SENSING THE UNSPOKEN

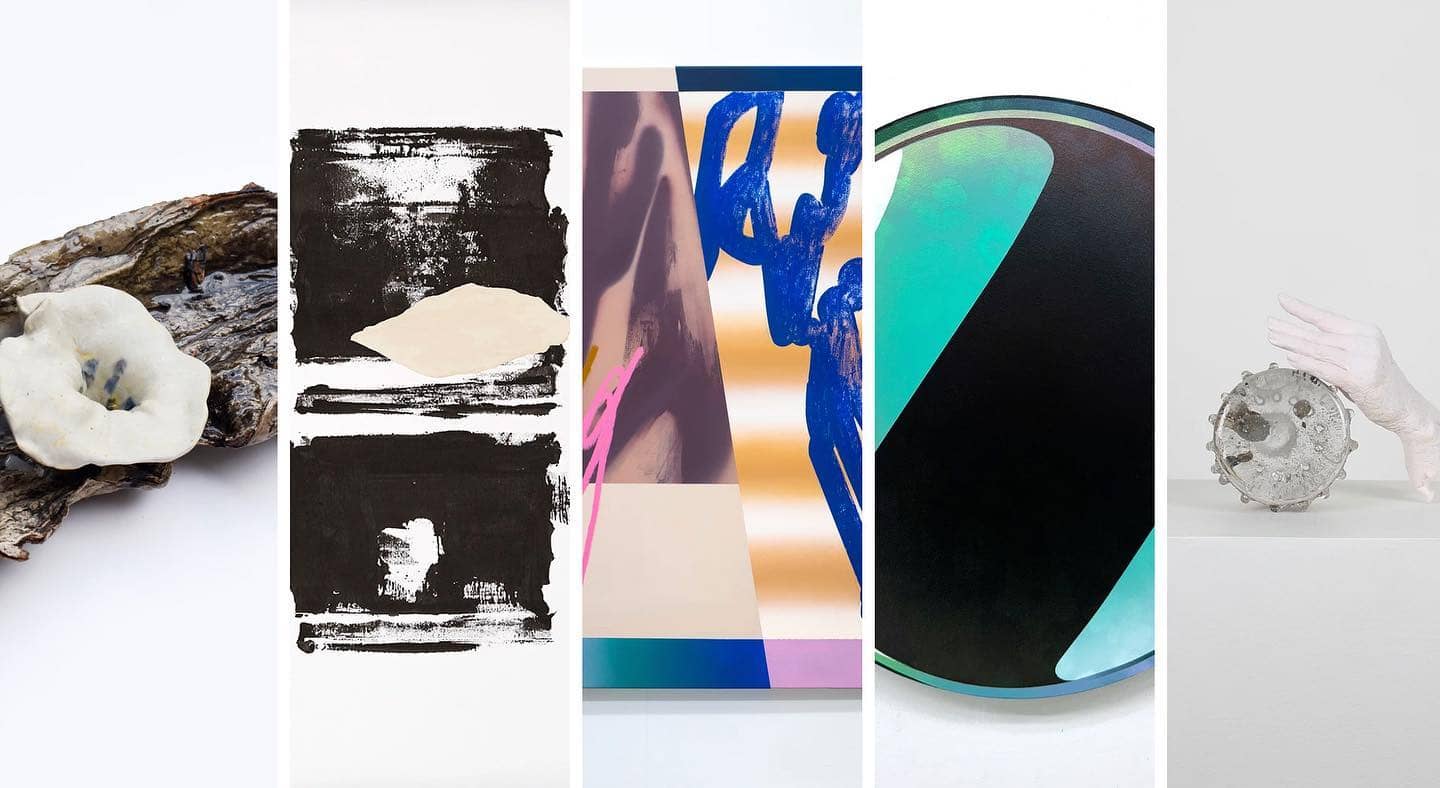

+ARTE & TIAN Contemporain is pleased to present Sensing the Unspoken, a group show featuring works by Raffaella Descalzi (Ecuador), Hidenori Ishii (United States), Yen-Chao Lin (Canada and Taiwan), Juan Miguel Marín (United States), and Roberto Rivadeneira (Ecuador and Germany). Organized in collaboration with TIAN Contemporain (Montreal, Canada), the exhibition takes memory and connection as its starting point for navigating the interrelations between individuals and their social and natural environments.

Está bien no pensar en todas las cosas (It's Okay Not to Think About Everything)

Está bien no pensar en todas las cosas delves into the terrain of error and failure, using uncertainty to question society's effects on non-normative bodies. Through drawing, ceramics, and weaving, David transforms personal experiences into pieces that flow, expand, and twist perceptions of marika (queer) and sick bodies. The works depict dreamlike worlds, bodies, and mountains, proposing new forms of resistance and valuing fragility as a vital part of existence in a society that is heading toward its own extinction (Santiago Ávila Albuja).

El césped es más verde del otro lado de la cerca (The Grass Is Greener on the Other Side of the Fence)

The idea behind the proverb "the grass is always greener on the other side of the fence" goes back to the poetry of Publius Ovidius Naso (1st century BC), better known as Ovid, who wrote: Fertilior seges est alienis semper in agris (the harvest is always more fruitful in another's field). The proverb as we know it, however, comes from an American popular song written by Raymond B. Egan and Richard A. Whiting in 1924. A quick look at human nature suggests that envy and misperception go back long before Ovid and are, without doubt, still present today...